Building a Wire Diagram Classifier with Random Forests

In this blog post, we’ll walk through the process of building an image classification system that detects “wire diagrams” from a set of images using the Random Forest algorithm. The project aims to develop a classifier that can accurately differentiate between wire diagrams and other types of images. By the end of this tutorial, you will understand how to load image data, preprocess it, train a model, evaluate its performance, and save the model for future use.

Setting Up the Environment

Before diving into the code, install the necessary Python libraries. For this project, we’ll use:

- Pillow: For opening, manipulating, and processing images.

- NumPy: For handling numerical data and arrays, which is critical when dealing with image data.

- scikit-learn: For building and training the machine learning model.

- Joblib: To save and load the trained model for future use.
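As a quick sanity check after installing (for instance with `pip install pillow numpy scikit-learn joblib`), a small script along these lines can confirm that each library imports. The helper name `installed_versions` is our own, not part of any library; note that Pillow imports as `PIL` and scikit-learn as `sklearn`.

```python
import importlib

# Import names differ from the package names: Pillow imports as PIL,
# scikit-learn as sklearn.
REQUIRED_MODULES = ("PIL", "numpy", "sklearn", "joblib")


def installed_versions(modules=REQUIRED_MODULES):
    """Map each module name to its version string, or None if it is missing.

    Hypothetical helper for this tutorial, not a library function.
    """
    versions = {}
    for name in modules:
        try:
            module = importlib.import_module(name)
        except ImportError:
            versions[name] = None
        else:
            versions[name] = getattr(module, "__version__", "unknown")
    return versions


if __name__ == "__main__":
    for name, version in installed_versions().items():
        print(f"{name}: {version or 'NOT INSTALLED'}")
```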

Organizing the Image Data

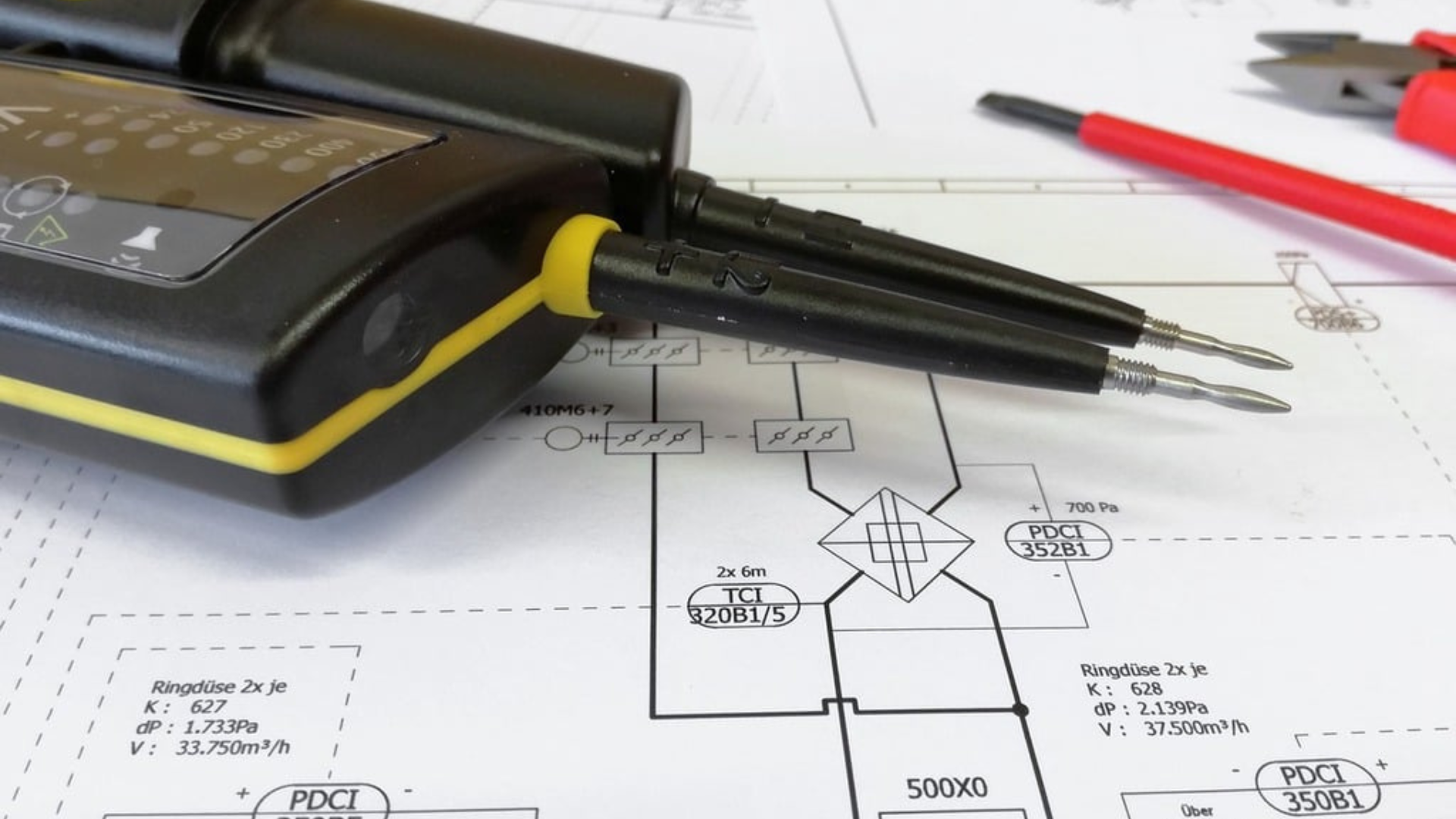

In machine learning, the first step is always to gather and prepare your data. For this project, we assume that the images are organized in folders, with each folder representing a class. We will be working with two classes:

- Wire Diagrams: Images that depict electrical or technical diagrams.

- Other Images: Any image that doesn’t fit into the “wire diagram” category.

Each of these classes has its own folder.

The goal is to load all the images and preprocess them for use in the machine learning model. For each image:

- We open the image, convert it to grayscale (which reduces complexity by eliminating color), and resize it to a standard size (500×500 pixels in this case). Resizing ensures that all images have the same dimensions, which is crucial for feeding them into the machine learning model.

- We then flatten the image. Since most machine learning algorithms expect a 1D array of numbers per sample, we flatten the 2D pixel grid into a single row, so each 500×500 image becomes a vector of 250,000 pixel values.

- Finally, we assign labels to the images: “1” for wire diagrams and “0” for other images. These labels will help the model learn to associate the features of wire diagrams with the correct category.
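The three steps above can be sketched roughly as follows. This is a minimal sketch, not the exact code behind the project: the function names and the choice to skip unreadable files are our assumptions, and the folder paths in the usage comment are placeholders.

```python
from pathlib import Path

import numpy as np
from PIL import Image

IMAGE_SIZE = (500, 500)  # target size mentioned in the text


def load_image_as_vector(path, size=IMAGE_SIZE):
    """Open an image, convert it to grayscale, resize it, and flatten it to 1D."""
    with Image.open(path) as img:
        gray = img.convert("L")      # "L" is Pillow's 8-bit grayscale mode
        resized = gray.resize(size)  # every image ends up with the same dimensions
        return np.asarray(resized, dtype=np.uint8).flatten()


def load_folder(folder, label, size=IMAGE_SIZE):
    """Return (vectors, labels) for every readable image in `folder`.

    Hypothetical helper: files Pillow cannot open are skipped silently.
    """
    vectors, labels = [], []
    for path in sorted(Path(folder).iterdir()):
        if not path.is_file():
            continue
        try:
            vectors.append(load_image_as_vector(path, size))
        except OSError:
            # Pillow raises an OSError subclass for files it cannot identify.
            continue
        labels.append(label)
    return vectors, labels


# Usage (placeholder folder names):
# wire_vectors, wire_labels = load_folder("data/wire_diagrams", label=1)
# other_vectors, other_labels = load_folder("data/other", label=0)
```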

Preparing the Data for Training

Once the images are preprocessed, we need to convert them into NumPy arrays. This allows us to efficiently handle large datasets and perform mathematical operations on the image data. The flattened images are stored in a 2D array X with one row per image and one column per pixel, and their corresponding labels (0 or 1) are stored in a 1D array y.
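One way to assemble X and y from two lists of flattened images is sketched below. The helper name `build_dataset` is hypothetical, and the tiny 4-pixel vectors only stand in for real images. Storing pixels as `uint8` is our choice to keep memory down: at 500×500, each image takes 250,000 bytes, so 1,000 images need about 250 MB.

```python
import numpy as np


def build_dataset(wire_vectors, other_vectors):
    """Stack flattened images into X and build the matching label array y.

    Hypothetical helper: wire diagrams get label 1, other images label 0.
    """
    X = np.array(list(wire_vectors) + list(other_vectors), dtype=np.uint8)
    y = np.array([1] * len(wire_vectors) + [0] * len(other_vectors), dtype=np.int64)
    return X, y


# Toy stand-ins for flattened images (4 "pixels" each).
wire = [np.zeros(4, dtype=np.uint8), np.ones(4, dtype=np.uint8)]
other = [np.full(4, 255, dtype=np.uint8)]

X, y = build_dataset(wire, other)
print(X.shape, y)  # (3, 4) [1 1 0]
```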

With the data prepared, the next step is to split it into training and testing sets. We typically use 80% of the data for training the model and reserve 20% for testing its performance. This allows us to evaluate how well the model generalizes to new, unseen data.

In this case, we use train_test_split from scikit-learn to randomly divide the dataset into training and test sets.
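A sketch of the 80/20 split is shown here. The wrapper name `split_dataset`, the fixed seed, and the `stratify=y` argument are our additions rather than something the original project is known to use. Stratifying keeps the proportion of wire diagrams roughly equal in both sets.

```python
from sklearn.model_selection import train_test_split


def split_dataset(X, y, test_size=0.2, seed=42):
    """Split X and y into training and test sets (80/20 by default).

    Assumptions: a fixed seed for reproducibility, and stratification on y
    so both sets keep a similar class balance.
    """
    return train_test_split(
        X, y, test_size=test_size, random_state=seed, stratify=y
    )


# Usage:
# X_train, X_test, y_train, y_test = split_dataset(X, y)
```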

Training the Random Forest Classifier

Now that the data is ready, we can start building our model. In this project, we use a Random Forest classifier, which is an ensemble method that combines multiple decision trees to make predictions.

The Random Forest algorithm is a reasonable choice for a simple image classification task like this one because it can handle high-dimensional data (here, one feature per pixel) and tends to give robust predictions with little tuning. Each decision tree in the forest is trained on a random sample of the training data and considers a random subset of features when choosing its splits, and the final prediction is determined by aggregating the results from all the trees.
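To make the aggregation step concrete, the sketch below trains a small forest on random toy data and rebuilds its output by hand. In scikit-learn, the forest's class probabilities are the average of the individual trees' probabilities. The function name `average_tree_probabilities` and the toy data are ours, purely for illustration.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier


def average_tree_probabilities(forest, X):
    """Average the class probabilities predicted by each tree in a fitted forest."""
    per_tree = [tree.predict_proba(X) for tree in forest.estimators_]
    return np.mean(per_tree, axis=0)


# Toy data: 40 random "images" of 16 pixels each, with alternating labels.
rng = np.random.default_rng(0)
X_toy = rng.integers(0, 256, size=(40, 16))
y_toy = np.array([0, 1] * 20)

forest = RandomForestClassifier(n_estimators=10, random_state=0).fit(X_toy, y_toy)

manual = average_tree_probabilities(forest, X_toy)
matches = np.allclose(manual, forest.predict_proba(X_toy))
print("Manual average matches the forest's probabilities:", matches)
```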

We train the Random Forest model using the RandomForestClassifier class from scikit-learn. The model is trained on the training set, and the algorithm learns to associate patterns in the image data with the correct labels (wire diagram or not).
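Training can be sketched in a few lines. The hyperparameters below (100 trees, a fixed seed, all CPU cores) are illustrative assumptions rather than the settings used in the original project, and `train_forest` is a hypothetical wrapper name.

```python
from sklearn.ensemble import RandomForestClassifier


def train_forest(X_train, y_train, n_trees=100, seed=42):
    """Fit a Random Forest on flattened image vectors.

    Assumed settings: n_trees trees, a fixed seed, and n_jobs=-1 to train
    the trees in parallel on all available cores.
    """
    model = RandomForestClassifier(
        n_estimators=n_trees, random_state=seed, n_jobs=-1
    )
    model.fit(X_train, y_train)
    return model


# Usage:
# model = train_forest(X_train, y_train)
```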

Evaluating Model Performance

After training the model, it’s important to evaluate its performance using the test set. The test set is made up of data that the model hasn’t seen before, so it serves as a good indicator of how well the model can generalize to new, unseen images.

To evaluate the model, we use the accuracy metric. Accuracy measures the percentage of correct predictions made by the model. It’s a simple but effective way to gauge the model’s overall performance.

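If the accuracy is high, it suggests that the model has learned useful patterns from the training data and is performing well on unseen data. Keep in mind that accuracy can be misleading when the classes are imbalanced: if 90% of the test images are not wire diagrams, a model that always predicts “0” still scores 90%.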
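A minimal evaluation sketch follows. Alongside accuracy it also returns a confusion matrix, which is our addition and not something the text above relies on. It shows how many images of each class were misclassified, which helps when accuracy alone looks suspiciously good. The function name `evaluate_predictions` is hypothetical.

```python
from sklearn.metrics import accuracy_score, confusion_matrix


def evaluate_predictions(y_true, y_pred):
    """Return (accuracy, confusion matrix) for binary labels 0 and 1.

    Confusion matrix rows are true labels and columns are predicted labels,
    both in the order [0, 1].
    """
    accuracy = accuracy_score(y_true, y_pred)
    matrix = confusion_matrix(y_true, y_pred, labels=[0, 1])
    return accuracy, matrix


# Usage with a trained model:
# accuracy, matrix = evaluate_predictions(y_test, model.predict(X_test))
# print(f"Accuracy: {accuracy:.2%}")
```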

Saving the Model for Future Use

Once we’re satisfied with the model’s performance, we save it to a file using joblib. Training a machine learning model can be time-consuming, especially with large datasets, so saving the trained model lets us load it later and make predictions on new data without retraining.

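joblib is a popular library for serializing Python objects like machine learning models. The saved model file can be shared with others or deployed in production environments where real-time predictions are needed. Because joblib files are based on pickle, which can execute arbitrary code when loaded, only load model files from sources you trust.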
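Saving and reloading might look like the sketch below. The file name `wire_diagram_rf.joblib` and the two wrapper functions are placeholders of our own; `joblib.dump` and `joblib.load` do the actual work.

```python
import joblib

MODEL_PATH = "wire_diagram_rf.joblib"  # placeholder file name


def save_model(model, path=MODEL_PATH):
    """Serialize a trained model to disk and return the path used."""
    joblib.dump(model, path)
    return path


def load_model(path=MODEL_PATH):
    """Load a previously saved model. Only use this on files you trust."""
    return joblib.load(path)


# Usage:
# save_model(model)
# model = load_model()
# prediction = model.predict(new_image_vector.reshape(1, -1))
```

When predicting on a new image, it needs the same preprocessing as the training data (grayscale, same size, flattened), and `reshape(1, -1)` turns the single vector into the one-row 2D array that scikit-learn expects.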

Transform Your Business with Machine Learning

Are you looking to build a custom machine learning model for your business or project? At Arivelm Technologies, we specialize in delivering innovative solutions that drive success. Whether it’s image classification, predictive analytics, or process automation, our team of experts can help you design and implement the best strategies tailored to your needs.

Visit Arivelm.com to explore how we can work together to take your business to the next level. Contact us today for a consultation and let’s start building the future together!